Meta-SGD: Learning to Learn Quickly for Few-Shot Learning

Jul 31, 2017 · Abstract page for arXiv paper 1707.09835: Meta-SGD: Learning to Learn Quickly for Few-Shot Learning. ... [Submitted on 31 Jul 2017, last revised 28 Sep 2017 (this version, …

Meta-SGD: Learning to Learn Quickly for Few-Shot Learning

Meta-SGD: Learning to Learn Quickly ... 0.31 ± 0.05 ... arXiv preprint arXiv:1703.03400, 2017. Graves et al. [2014] Alex Graves, Greg Wayne, and Ivo Danihelka. …

GitHub - foolyc/Meta-SGD: Meta-SGD experiment on Omniglot ...

Meta-SGD (Meta-SGD: Learning to Learn Quickly for Few Shot Learning, Zhenguo Li et al.) experiment on Omniglot classification, compared with MAML (Model-Agnostic Meta-Learning …

Meta-SGD: Learning to Learn Quickly for Few Shot Learning Zhenguo Li Fengwei Zhou Fei Chen Hang Li Huawei Noah’s Ark Lab {li.zhenguo, zhou.fengwei, chenfei100, …

Meta-SGD: Learning to Learn Quickly for Few-Shot Learning

Jul 31, 2017 · In contrast, meta-learning learns from many related tasks a meta-learner that can learn a new task more accurately and faster with fewer examples, where the choice of meta …

Meta-SGD: Learning to Learn Quickly for Few Shot Learning.

Few-shot learning is challenging for learning algorithms that learn each task in isolation and from scratch. In contrast, meta-learning learns from many related tasks a meta-learner that can …

"Meta-SGD: Learning to Learn Quickly for Few Shot Learning."

Jul 5, 2022 · "Meta-SGD: Learning to Learn Quickly for Few Shot Learning." help us. How can I correct errors in dblp? contact dblp; Zhenguo Li et al. (2017) ... DOI: — access: open. type: …

Meta-SGD: Learning to Learn Quickly for Few-Shot Learning,arXiv …

Few-shot learning is challenging for learning algorithms that learn each task in isolation and from scratch. In contrast, meta-learning learns from many related tasks a meta-learner that can …

Meta-SGD: Learning to Learn Quickly for Few-Shot Learning Zhenguo Li Fengwei Zhou Fei Chen Hang Li Huawei Noah’s Ark Lab {li.zhenguo, zhou.fengwei, chenfei100, …

GitHub - jik0730/Meta-SGD-pytorch: Neat implementation of Meta-SGD …

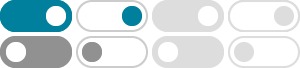

The only difference from MAML is that the task learning rate is parametrized as a vector during meta-training. As the authors report, we see fast convergence and higher performance …
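As a rough illustration of the vector-form learning rate described in this snippet, the adaptation step can be written as below. The notation is an assumption made for this sketch (θ for the initialization, α for the learning-rate vector, ∘ for the element-wise product, and train/test splits of a task T); it is not copied from the repository.

```latex
% MAML: one scalar step size \alpha shared by every parameter
\theta' = \theta - \alpha \, \nabla_\theta \mathcal{L}_{\mathrm{train}(\mathcal{T})}(\theta),
\qquad \alpha \in \mathbb{R}

% Meta-SGD: \alpha has the same shape as \theta and acts element-wise
\theta' = \theta - \alpha \circ \nabla_\theta \mathcal{L}_{\mathrm{train}(\mathcal{T})}(\theta),
\qquad \alpha \in \mathbb{R}^{\dim(\theta)}

% Both \theta and \alpha are meta-learned on the post-adaptation test loss
\min_{\theta,\,\alpha} \; \mathbb{E}_{\mathcal{T}}
  \left[ \mathcal{L}_{\mathrm{test}(\mathcal{T})}(\theta') \right]
```

Because α is learned per parameter, it can encode an update direction as well as a step size, rather than only scaling the gradient.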

1707.09835 - Meta-SGD: Learning to Learn Quickly for Few-Shot Learning

Analyze arXiv paper 1707.09835. Few-shot learning is challenging for learning algorithms that learn each task in isolation and from scratch. In contrast, meta-learning learns from many …

Welcome to Zhenguo Li's Homepage - Columbia University

Federated Meta-Learning for Recommendation, arXiv 22 Feb 2018. Fei Chen, Zhenhua Dong, Zhenguo Li, Xiuqiang He. Deep Meta-Learning: Learning to Learn in the Concept Space, arXiv …

Meta-SGD: Learning to Learn Quickly for Few-Shot Learning

#1 Meta-SGD: Learning to Learn Quickly for Few-Shot Learning [PDF] [Kimi 1]. Authors: Zhenguo Li, Fengwei Zhou, Fei Chen, Hang Li. Few-shot learning is challenging for learning algorithms …

Meta-SGD: Learning to Learn Quickly for Few-Shot Learning

Meta-SGD: Learning to Learn Quickly for Few-Shot Learning. Few-shot learning is challenging for learning algorithms that learn each task in isolation and from scratch. In contrast, meta …

Meta-SGD: Learning to Learn Quickly for Few-Shot Learning

In contrast, meta-learning learns from many related tasks a meta-learner that can learn a new task more accurately and faster with fewer examples, where the choice of meta-learners is …

63days/Meta-SGD: Meta-SGD: Pytorch Implementation - GitHub

Meta-SGD: Pytorch Implementation. Contribute to 63days/Meta-SGD development by creating an account on GitHub.

[1707.03141] A Simple Neural Attentive Meta-Learner - arXiv.org

Jul 11, 2017 · Deep neural networks excel in regimes with large amounts of data, but tend to struggle when data is scarce or when they need to adapt quickly to changes in the task. In …

Meta-SGD: Learning to Learn Quickly for Few-Shot Learning归 …

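It can be summed up in one sentence: MAML's outer loop updates only θ, whereas Meta-SGD's outer loop also updates the learning rate α used in the inner loop, in exactly the same way as θ (both use the same loss function). Here α is no longer a single number but a tensor of the same size as θ, meaning …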
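The outer-loop update of both θ and α described in this snippet can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code or any of the listed repositories: the task family (noiseless linear regression), every function name, the one-task-per-step outer loop, and all hyperparameters are hypothetical. Gradients are derived by hand for the squared-error loss so the block needs no autodiff library.

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def mse(theta, data):
    """Mean squared error of the linear model y = theta . x."""
    return sum((dot(theta, x) - y) ** 2 for x, y in data) / len(data)

def grad(theta, data):
    """Gradient of mse with respect to theta."""
    g = [0.0] * len(theta)
    for x, y in data:
        r = 2.0 * (dot(theta, x) - y) / len(data)
        for j, xj in enumerate(x):
            g[j] += r * xj
    return g

def hessian_vec(data, v):
    """Hessian of mse (constant for a linear model) times the vector v."""
    out = [0.0] * len(v)
    for x, _ in data:
        s = 2.0 * dot(x, v) / len(data)
        for j, xj in enumerate(x):
            out[j] += s * xj
    return out

def adapt(theta, alpha, train):
    """Inner loop: one step with an element-wise learning rate alpha."""
    g = grad(theta, train)
    return [t - a * gj for t, a, gj in zip(theta, alpha, g)]

def meta_grads(theta, alpha, train, test):
    """Gradients of the post-adaptation test loss w.r.t. theta and alpha."""
    g_train = grad(theta, train)
    g_test = grad(adapt(theta, alpha, train), test)
    # d theta'/d theta = I - diag(alpha) H, so the chain rule gives g - H (alpha * g)
    hv = hessian_vec(train, [a * g for a, g in zip(alpha, g_test)])
    d_theta = [g - h for g, h in zip(g_test, hv)]
    # d theta'_j / d alpha_j = -g_train_j
    d_alpha = [-gt * gr for gt, gr in zip(g_test, g_train)]
    return d_theta, d_alpha

def sample_task(rng, dim=2, n=5):
    """Hypothetical task: regression onto random weights; returns (train, test)."""
    w = [rng.uniform(-1, 1) for _ in range(dim)]
    def draw():
        pts = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(n)]
        return [(x, dot(w, x)) for x in pts]
    return draw(), draw()

def meta_train(steps=200, meta_lr=0.01, seed=0):
    """Outer loop: theta and alpha are updated the same way, from the same loss."""
    rng = random.Random(seed)
    theta = [0.0, 0.0]
    alpha = [0.1, 0.1]  # same shape as theta, not a single scalar
    for _ in range(steps):
        train, test = sample_task(rng)
        d_theta, d_alpha = meta_grads(theta, alpha, train, test)
        theta = [t - meta_lr * d for t, d in zip(theta, d_theta)]
        alpha = [a - meta_lr * d for a, d in zip(alpha, d_alpha)]
    return theta, alpha

theta, alpha = meta_train()
```

Holding `alpha` fixed at a shared scalar value and updating only `theta` in `meta_train` would give a MAML-style loop under the same assumptions, which makes the contrast described in the snippet easy to see.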

Meta-SGD: Learning to Learn Quickly for Few-Shot Learning

Article “Meta-SGD: Learning to Learn Quickly for Few-Shot Learning” Detailed information of the J-GLOBAL is a service based on the concept of Linking, Expanding, and Sparking, linking …